Within Databricks, Dashboards are frequently used to surface data to this audience and provide quick internal visibility.

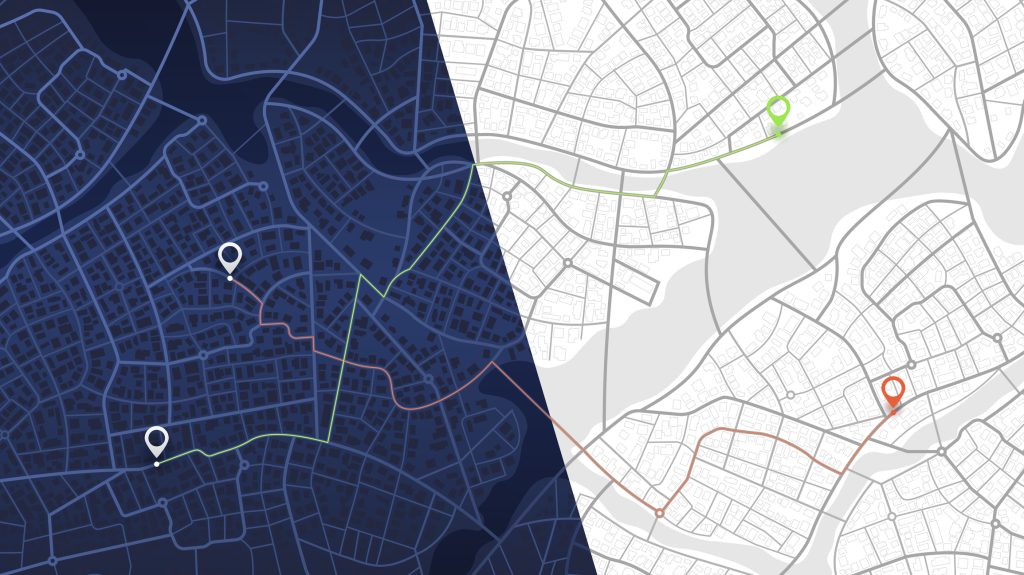

However, anyone who has relied on Databricks Dashboards as a presentation layer will be familiar with their limitations: interactivity is constrained, large datasets are often truncated, version control is minimal, cross-dashboard interactions are not supported, and geospatial visualisation options are limited and difficult to customise.